Many cloud and Kubernetes cost optimization tools are on the market today. However, average Kubernetes cluster utilization in the public cloud is still very low, typically less than 30%. This is the primary source of waste and accounts for 50% or more of cloud compute spend.

Elastic Machine Pool (EMP) handles EKS cost optimization in a unique way. Designed for DevOps/FinOps teams, EMP delivers automated EKS cluster efficiency and cost savings, powered by virtualization and live rebalancing of cloud compute resources. It does so with no app disruptions and no changes to app configuration, so you no longer need to negotiate with your engineers.

In our initial testing of EMP in a customer environment, Kubernetes utilization improved from 22% to 60% and corresponding AWS infrastructure costs went down by 50%.

The EKS utilization conundrum

As they develop and test their applications, developers identify the minimum and maximum resources needed by the application and then configure ‘requests’ and ‘limits’ on application pods. Kubernetes/EKS uses that information to schedule pods onto different nodes, leveraging auto-scaling technology to add new nodes, if needed, to accommodate these pods.

However, real-world usage differs greatly from those requests, with significantly more idle time. This results in inefficient resource utilization, and most EKS users rarely reach 25% utilization.

EKS clusters – specifically with the use of auto-scaling technologies and fixed-size nodes – introduce additional challenges, further exacerbating the utilization problem.

The sizing problem

Pods within EKS clusters often misalign with available EC2 sizes. For a new pod that requires 2.5GB RAM, the autoscaler ends up using an instance of size 4GB because the closest available EC2 sizes are 2GB and 4GB.
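To make the arithmetic concrete, here is a minimal sketch of the sizing mismatch. The list of available sizes and the helper names are hypothetical and chosen only for illustration; real instance selection also has to account for CPU, system-reserved memory, and other pods on the node.

```python
from typing import Optional, Sequence

# Hypothetical memory sizes (in GB) offered by an instance family.
# Illustrative values only, not a real EC2 catalog.
AVAILABLE_SIZES_GB = [1, 2, 4, 8, 16]


def smallest_fitting_size(required_gb: float,
                          sizes: Sequence[float] = AVAILABLE_SIZES_GB) -> Optional[float]:
    """Return the smallest size that can hold the pod, or None if nothing fits."""
    candidates = [size for size in sorted(sizes) if size >= required_gb]
    return candidates[0] if candidates else None


def wasted_fraction(required_gb: float, chosen_gb: float) -> float:
    """Fraction of the chosen instance's memory that the pod does not need."""
    return (chosen_gb - required_gb) / chosen_gb


chosen = smallest_fitting_size(2.5)
print(f"chosen={chosen} GB, unused={wasted_fraction(2.5, chosen):.1%}")
# chosen=4 GB, unused=37.5%
```

Under these assumptions, the 2.5GB pod from the example above leaves 1.5GB of the 4GB instance idle, which is more than a third of the paid-for memory.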

Fragmentation over time

The dynamic nature of adding and removing pods causes fragmentation, resulting in suboptimal node allocation and resource waste within EKS. Placing pods onto nodes efficiently is sometimes referred to as the bin-packing problem.
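The effect can be illustrated with a small simulation. This is a simplified sketch, not how the Kubernetes scheduler or any autoscaler actually works: pods are reduced to a single size number, nodes have one hypothetical capacity, and placement uses a plain first-fit heuristic.

```python
def first_fit(pod_sizes, node_capacity):
    """Place pods in the given order on the first node with room.

    Returns a list of nodes, each node being the list of pod sizes it hosts.
    """
    nodes = []
    for size in pod_sizes:
        for node in nodes:
            if sum(node) + size <= node_capacity:
                node.append(size)
                break
        else:
            # No existing node has room, so a new node is added.
            nodes.append([size])
    return nodes


def repack(nodes, node_capacity):
    """Re-place all running pods, largest first (first-fit decreasing)."""
    running = sorted((size for node in nodes for size in node), reverse=True)
    return first_fit(running, node_capacity)


NODE_CAPACITY = 8  # hypothetical capacity units per node

# Six pods of size 4 fill three nodes completely.
nodes = first_fit([4, 4, 4, 4, 4, 4], NODE_CAPACITY)

# One pod per node is later removed: every node is now half empty.
fragmented = [node[:1] for node in nodes]

repacked = repack(fragmented, NODE_CAPACITY)
print(len(fragmented), len(repacked))  # 3 2
```

In this toy case the three half-empty nodes could be consolidated into two, but only by moving a running pod, which is exactly the kind of change that causes restarts in a conventional setup.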

Improper resource settings

To ensure Quality of Service (QoS), app developers set very high requests and limits; in some cases, developers set resource requests equal to the limits. Because average usage is typically lower than peak usage, a large portion of resources goes unused.
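The gap between what is requested and what is used can be expressed as a simple ratio. The sketch below uses made-up pod names and numbers purely for illustration; in practice the usage figures would come from a metrics system.

```python
from dataclasses import dataclass


@dataclass
class PodSample:
    name: str
    cpu_request_millicores: int    # what the developer reserved
    cpu_avg_usage_millicores: int  # what the pod used on average


def request_utilization(pods):
    """Average CPU usage divided by total CPU requested across all pods."""
    requested = sum(p.cpu_request_millicores for p in pods)
    used = sum(p.cpu_avg_usage_millicores for p in pods)
    return used / requested if requested else 0.0


# Hypothetical workload: requests are sized for peak, usage is the average.
pods = [
    PodSample("api", 2000, 400),
    PodSample("worker", 1000, 300),
    PodSample("cache", 1000, 180),
]

print(f"utilization of requested CPU: {request_utilization(pods):.0%}")
# utilization of requested CPU: 22%
```

Because the scheduler reserves capacity based on requests rather than actual usage, everything above the used fraction is capacity that is paid for but sits idle.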

Siloed teams

In an organization, the FinOps team owns costs, the DevOps team monitors utilization and owns resource optimization, and AppDev is responsible for suggesting resource configuration, i.e., setting requests and limits. This organizational structure hinders improvements in utilization and/or cost optimization.

Change resistance

There is a conflict between the AppDev teams’ need to guarantee application SLAs (controlled by app resource configuration) and the DevOps team’s desire to right-size the infrastructure, resulting in suboptimal configuration.

App disruptions

Even when everyone agrees on the best configuration, applying changes may cause minor or major disruptions in the application. Various tools recommend right-sizing instances, which results in pod restarts, which in turn can cause disruptions, application downtime, and performance hits.

Dynamic environments

In a world of rapidly changing software, usage patterns shift frequently, rendering occasional optimizations quickly obsolete. There is no automated feedback loop to account for ever-changing conditions. As a result, the resource configuration is updated infrequently, leaving unrealized gains.

EKS cost optimization tools and their shortcomings

All of the above pain points are well known, and various attempts have been made to address them using the approaches listed below:

Pod sizing

A typical solution involves observing the pod’s behavior and determining the appropriate ‘requests’ and ‘limits’. Some open-source tools, such as the Vertical Pod Autoscaler (VPA), will either report or apply the recommendations. Various commercial tools offer similar capabilities, with some being more accurate, more performant, or a combination of the two.

Instance right-sizing and bin-packing

Instances hosting pods may have unused capacity due to pod resizing or the dynamic nature of the workloads. Certain tools, such as Karpenter, can right-size the instances and shuffle the pods around to provide the best pod-to-VM placement.

Some tools provide one or the other, while others provide both. They are frequently combined with visibility into AWS cost savings. While the solutions mentioned above are good and solve a portion of the problem, they leave much to be desired. Here are a few issues we have encountered.

Pod disruptions can cause downtime

Pods may restart if they are moved between nodes due to bin-packing optimization. Even dynamic resource adjustments can cause pods to restart. Not all applications use a 12-factor methodology or are completely stateless. Such applications are unable to handle forced restarts and outages.

Many enterprise apps that cache large amounts of data in memory, as well as databases, experience downtime when their pods are reconfigured with a different resource configuration. Downtime can range from several seconds for simple apps to 5+ minutes for large monolithic apps (some applications may have a long startup time). For mission-critical systems, any disruption is unacceptable and may require an explicit downtime window to execute bin-packing and/or resizing.

Pod usage is dynamic

Pods have processes that may have periods of inactivity followed by periods of heavy usage. No single static request and limit configuration can right-size such pods: a value sized for the peak is wasted during idle periods, and a value sized for the average is too small during bursts. Existing solutions rely on horizontal scaling or make suboptimal decisions for pod sizing, which does not solve the utilization problem completely. So even if pods are perfectly packed, the utilization at the AWS instance/hardware level remains poor, as apps seldom fully utilize the provisioned capacity.

EMP optimizes EKS costs with dynamic auto-sizing

Platform9’s Elastic Machine Pool (EMP) is an innovative new product specifically designed to target the core inefficiency of EKS, namely low utilization. EMP tackles inefficient usage by leveraging hypervisor-level virtualization capabilities that are not natively available on AWS.

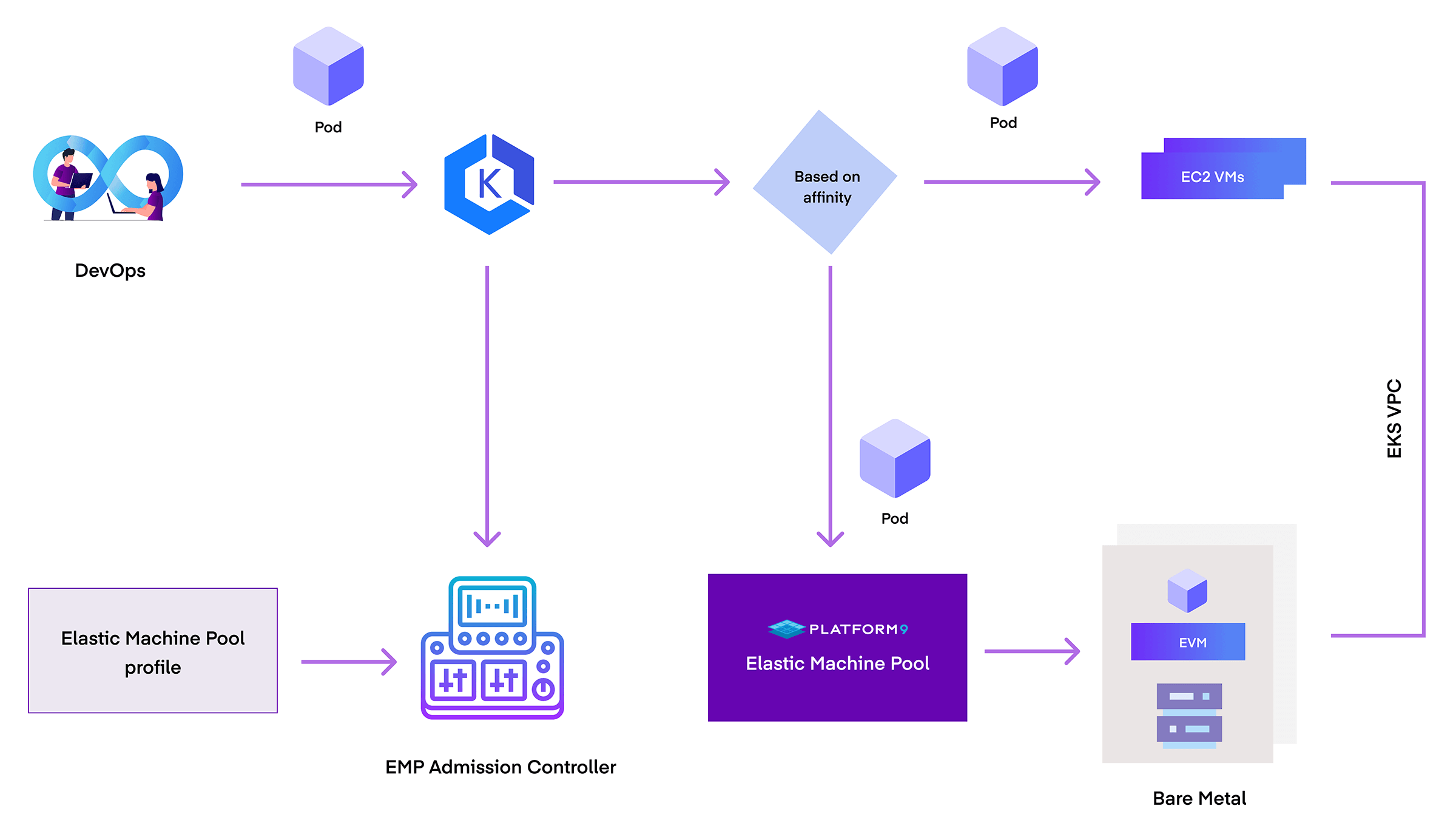

EMP architecture: A high-level overview

EMP employs a combination of virtualization technologies and other Kubernetes extensions to provide a seamless experience. The following diagram shows a high-level architecture for a given customer environment.

EKS currently provides two compute engines:

- EC2 instances: whether on-demand, reserved or spot, they are part of the same computing capacity type.

- Fargate: A serverless computing engine that hides ‘nodes’ of the cluster by managing them internally.

EMP is the new computing engine for EKS, which is far more cost-effective than either EC2 or Fargate. How? It utilizes proven virtualization technologies. Instead of creating EC2 VMs, it uses EC2 Bare Metal instances to create Elastic VMs (EVM) that can be joined to EKS as ‘workers’. These EVMs leverage the same AWS infrastructure as EC2 VMs and have access to all other AWS infrastructure services like VPC, EBS, EFS, RDS, etc. Pods run on EVMs just like they run on EC2 VMs.

EVMs have a few advantages:

- EVMs can be overprovisioned on a small cluster of bare-metal servers. Overprovisioning allows allocating more virtual resources than are physically available, since not all VMs use their maximum resources concurrently.

- EVMs are continuously bin-packed onto bare metal servers using EMP live migration and auto-scaling technologies. Live migration enables seamlessly moving VMs between physical hosts without disruption.

- EMP is designed to be compatible with AWS infrastructure, which means no changes in the usage of AWS services like EBS, EFS, VPC, etc. EMP is also compatible with EKS, providing a seamless experience. Developers do not need to change their applications or learn any new tools or APIs. They do not even need to change the requests and limits for their apps. EMP automatically optimizes in real time to ensure app performance and SLAs.

This results in best-in-class resource utilization and cost benefits.

This approach is unique in the industry, solving the problem at the root cause layer (bare metal and virtualization) rather than trying to fix the symptoms. EMP obviates the need to right-size at the pod or VM layer. This enables a level of efficiency and cost optimization not possible with existing tools and approaches.
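The reasoning behind overprovisioning, described in the list of EVM advantages above, rests on the observation that VMs rarely peak at the same moment. The sketch below is only an illustration of that idea with invented usage traces; it does not represent EMP's actual placement or overcommit logic.

```python
def sum_of_peaks(traces):
    """Capacity needed if every VM is reserved for its own peak."""
    return sum(max(trace) for trace in traces)


def peak_of_sum(traces):
    """Capacity needed if VMs share one pool sized for their combined peak."""
    return max(sum(samples) for samples in zip(*traces))


# Hypothetical usage samples (arbitrary units) for three VMs over four
# time slots. Each VM bursts to 6 units, but in a different slot.
vm_traces = [
    [1, 6, 1, 1],
    [1, 1, 6, 1],
    [1, 1, 1, 6],
]

reserved = sum_of_peaks(vm_traces)  # 18
needed = peak_of_sum(vm_traces)     # 8
print(f"reserved={reserved}, needed={needed}, ratio={reserved / needed:.2f}")
# reserved=18, needed=8, ratio=2.25
```

In this toy data set, reserving each VM's peak separately takes more than twice the capacity that the combined workload ever uses. Real workloads can peak together, which is why the source pairs overprovisioning with live migration and auto-scaling rather than relying on a fixed ratio.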

Why EKS Clusters need EMP

The benefits of integrating EMP into EKS are manifold:

- Unmatched Efficiency: EMP directly targets and rectifies inefficiencies in EKS VM instances at the hypervisor layer.

- Seamless Integration: EMP is tailor-made for EKS, ensuring developers face no changes in their workflow.

- Non-disruptive Operations: Thanks to virtualization, EKS applications remain stable during any infrastructural optimizations.

- Broad Applicability: While EMP is optimized for EKS, its methodologies will be adapted for plain EC2 instances and will be extended to other public cloud platforms, amplifying its benefits.

In summary, as EKS continues to shape the future of container orchestration, maximizing its utilization is of paramount importance. Platform9’s EMP offers an avant-garde approach, ensuring businesses extract the utmost value from their EKS investments.

Dig Deeper

- Read the technical documentation on EMP

- Watch this EMP demo video that showcases how EMP instance and pod live migration works

- Check out the EMP product web page

- Learn how a SaaS company cut its EKS costs by 58% using EMP

Try EMP for yourself – it takes minutes to start

EMP is completely free to try and easy to get started with. We’ve invested a lot of effort in integrating seamlessly with your existing EKS environment and in enabling you to incrementally test and migrate your pods to EMP EVMs. You can start by connecting a cluster and instantly see how much EMP may save you. We guarantee that it will be a lot more than any other EKS cost optimization tool you’ve tried so far. Start free and try it for yourself.

Or book a personalized demo and let our team walk you through a live demo session, giving you a personalized ROI with EMP for your environment.

We’d love to hear your feedback. Reach out to us anytime with your questions or comments.